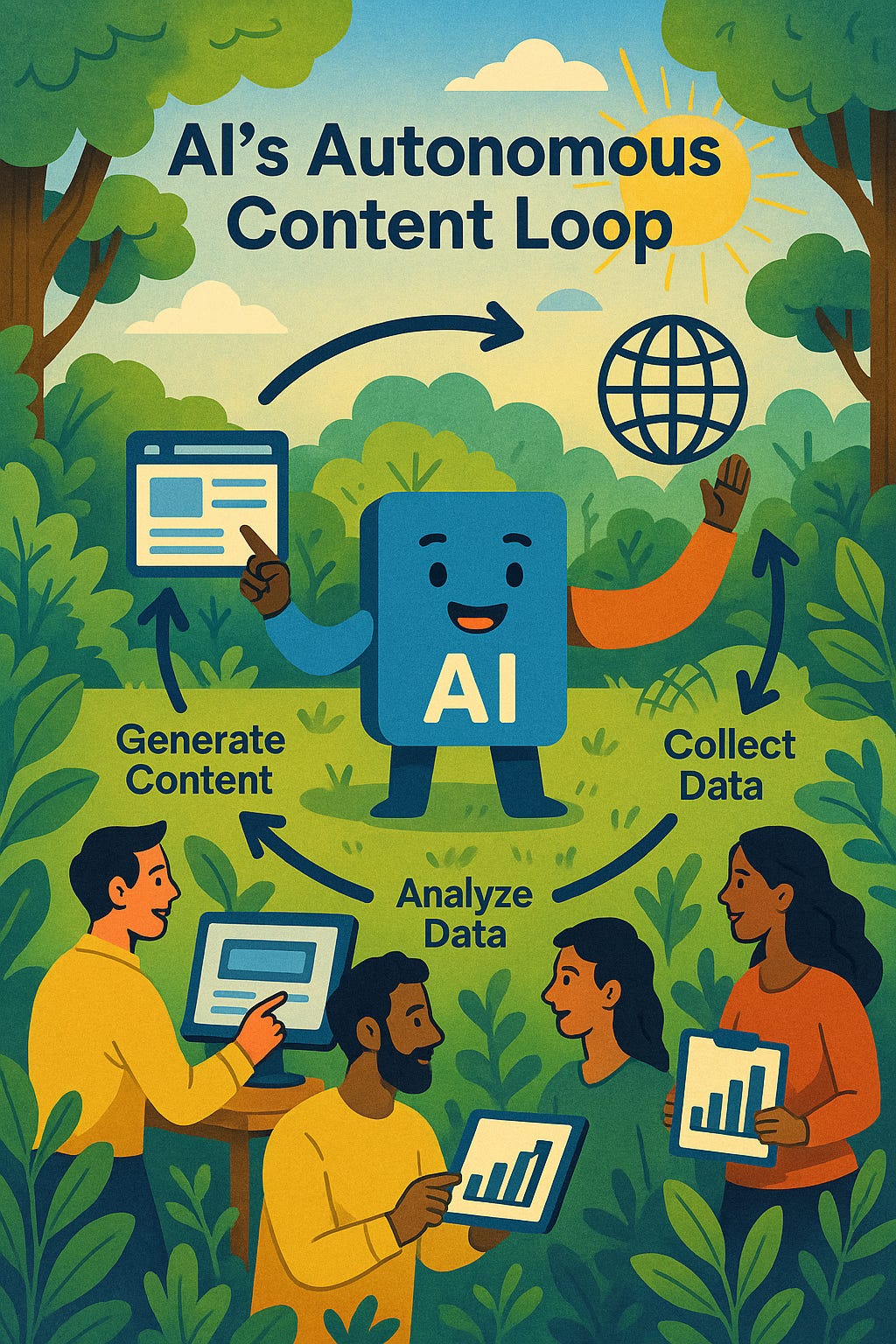

AI's Autonomous Content Loop

Person A has an idea. Person A asks AI to turn that idea into a written product (an article, email, or social media post).

Person B then asks AI to explain what Person A wrote, clearly and concisely, so the key points come through with minimal time investment.

This scenario happens every day, and it's becoming more common, more polished, and more professional.

But something fascinating is happening here: AI-to-AI communication. The idea passes through AI just like data moves across the internet, from an encoder to a decoder in streams of information.

There's no shortage of similar precedents. Think of the internet, banking, algorithmic trading, crypto. So what's new?

What's new is that this isn't just about form but about content: Not just how something is transmitted, but what is said, how it's phrased, and what meaning comes out of it.

We're witnessing the emergence of a process where knowledge is created and consumed by systems. And we're becoming strategic managers of that process.

The Loop Is Already Working

This is already happening. Right now:

AI generates synthetic training data to train other AI models, creating entire datasets without human input

One AI writes a news report from structured data, another AI summarizes it, and a third AI uses that summary to make automated trading decisions

AI creates marketing copy and feeds it to a translation AI, whose output goes to a sentiment analysis AI, which in turn informs ad placement algorithms

Chatbots generate customer service responses that get fed into other AI systems to update knowledge bases and training data

We're seeing what researchers call "chained AI processes": intelligent workflows where one AI's output becomes another AI's input, creating autonomous information pipelines that operate at superhuman scale.
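To make the idea concrete, here is a minimal Python sketch of the news-to-trading chain described above. It is an illustration only: the three stage functions (`write_report`, `summarize`, `decide`) are hypothetical stand-ins for real models, and the keyword rule in `decide` is a placeholder rather than anything a real trading system would use. The point is the shape of the loop, where each stage consumes the previous stage's output and no human sits in between.

```python
from typing import Callable, List

# A stage is any function that takes text and returns text.
Stage = Callable[[str], str]

def write_report(data: str) -> str:
    # Hypothetical stand-in for a model that drafts a report from structured data.
    return f"REPORT: {data}"

def summarize(report: str) -> str:
    # Hypothetical stand-in for a summarizer: keep only the first 40 characters.
    return report[:40]

def decide(summary: str) -> str:
    # Hypothetical stand-in for a downstream decision system (placeholder rule).
    return "BUY" if "up" in summary else "HOLD"

def run_chain(stages: List[Stage], payload: str) -> List[str]:
    """Feed each stage's output into the next, keeping every intermediate result."""
    trace = [payload]
    for stage in stages:
        payload = stage(payload)
        trace.append(payload)
    return trace

trace = run_chain([write_report, summarize, decide], "ACME revenue up 12%")
for step in trace:
    print(step)
```

Keeping the full `trace` rather than only the final answer is a deliberate choice in this sketch: it gives a human reviewer a place to inspect what each stage actually passed along.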

The Efficiency Revolution

The benefits are remarkable: unprecedented speed, scale, and sophistication. Financial algorithms can process market signals in microseconds. News analysis happens in real-time across global sources. Educational content personalizes instantly to millions of individual learning styles.

This creates what I'd call the "cognitive multiplication effect." AI systems amplify human intelligence rather than replace it. The key is understanding when to lean in and when to maintain oversight.

Like any powerful tool, these systems work best when we understand their capabilities and limits. Sure, a mistranslation could cascade through a chain, or a biased dataset could skew downstream decisions. But these are engineering challenges, not fundamental flaws.
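One way to see why cascading errors are worth engineering around is a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that each stage in a chain preserves the intended meaning with some fixed probability and that stages fail independently. Both the 0.95 figure and the independence assumption are hypothetical, not measurements of any real system.

```python
import math

def chain_reliability(stage_accuracies):
    """Probability that every stage succeeds, assuming independent stages."""
    return math.prod(stage_accuracies)

# Four hypothetical stages, each assumed to be right 95% of the time.
end_to_end = chain_reliability([0.95, 0.95, 0.95, 0.95])
print(round(end_to_end, 2))  # about 0.81
```

Under these assumptions, four individually reliable stages produce a chain that is right only about four times in five, which is why checkpoints between stages matter more as chains grow longer.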

What We're Actually Gaining

This shift represents an extraordinary expansion of human cognitive capacity.

Cognitive augmentation is freeing us from routine information processing. Instead of spending hours reading reports, we can focus on interpretation, strategy, and creative problem-solving.

Enhanced pattern recognition lets us spot insights across vast datasets that would be impossible for humans to process manually.

The Skills Evolution

Rather than replacing human capabilities, this is reshaping which skills matter most.

The way we work is changing.

Working with AI is now just as important as knowing a technical skill. But using AI well means knowing how to judge its output—that’s the new kind of literacy.

Since AI can handle much of the execution, your value lies in setting smart goals and thinking strategically. The parts AI can’t replace—like creativity and judgment—are where humans still lead.

The most successful people and organizations are learning to dance with AI, not fight it.

What This Means for the Future

Is human understanding becoming unnecessary? Not at all. It's becoming more strategic. We're moving from processing information to directing intelligence.

Is thinking just issuing commands to a machine? No. Thinking is evolving to include designing systems, setting objectives, and interpreting results, which is higher-order cognitive work.

Will learning happen without actual learning? Learning is shifting toward meta-skills: how to ask better questions, evaluate sources, and synthesize insights across domains.

The Opportunity Ahead

This could be profoundly liberating. Less time on routine cognitive tasks means more time for value judgments, creative work, relationship building, and tackling complex problems that require human insight.

The challenges are real but manageable. We need to stay engaged with the underlying material, maintain our critical thinking muscles, and ensure broad access to these powerful tools. Without thoughtful design, we risk creating what some call a "cognitive oligarchy" where a small group controls the AI systems that shape how everyone else thinks and learns.

The key insight is that we're not becoming obsolete. In my opinion, we're becoming cognitive architects.

Designing the Future

We're at an exciting inflection point. The autonomous content loop is already spinning, processing information at scales that multiply human capacity. The question isn't whether this will continue but how we'll design systems that amplify human potential.

The most interesting opportunities:

Building AI literacy that empowers people to be effective collaborators

Creating human-AI workflows that combine the best of both intelligences

Developing safeguards that maintain quality while enabling speed

Fostering new expertise in directing and interpreting AI systems

The Choice Ahead

The future of human intelligence isn't about competing with AI. It's about conducting it. Like a conductor with an orchestra, our role is shifting from playing every instrument to creating harmony across the ensemble.

This could be the beginning of a remarkable expansion of human capability, where we focus on what we do best while AI handles what it does best.

We created the loop. Now the loop is creating everything else.

This article presents a compelling exploration of the increasingly recursive nature of AI-driven content ecosystems. The idea that content can now be generated, consumed, and reinterpreted by autonomous systems without direct human input is both fascinating and deeply consequential. I especially appreciate how the piece highlights the feedback loops—where AI not only creates content but also learns from its own outputs—which raises important questions about originality, context degradation, and semantic drift.

And why do we need humans if AIs will interact with each other? If AIs completely replace humans, humanity will simply die out like dinosaurs.